In the Quantitative Imaging Biomarker Lab (QIB), our research primarily focuses on SPECT/CT and PET/CT imaging, along with radiopharmaceutical therapy dosimetry. We are dedicated to developing cutting-edge methodologies, including but not limited to:

- Imaging hardware

- Imaging physics models

- Statistical iterative reconstruction algorithms

- Digital twins

- Monte Carlo simulation techniques

- Quantitative image analysis methods

- Deep learning-based approaches for enhanced image interpretation

Our goal is to pioneer innovative tools that can transform medical imaging into accurate quantitative biomarkers and ultimately improve patient care.

Below is a list of our ongoing research projects.

SPECT/CT

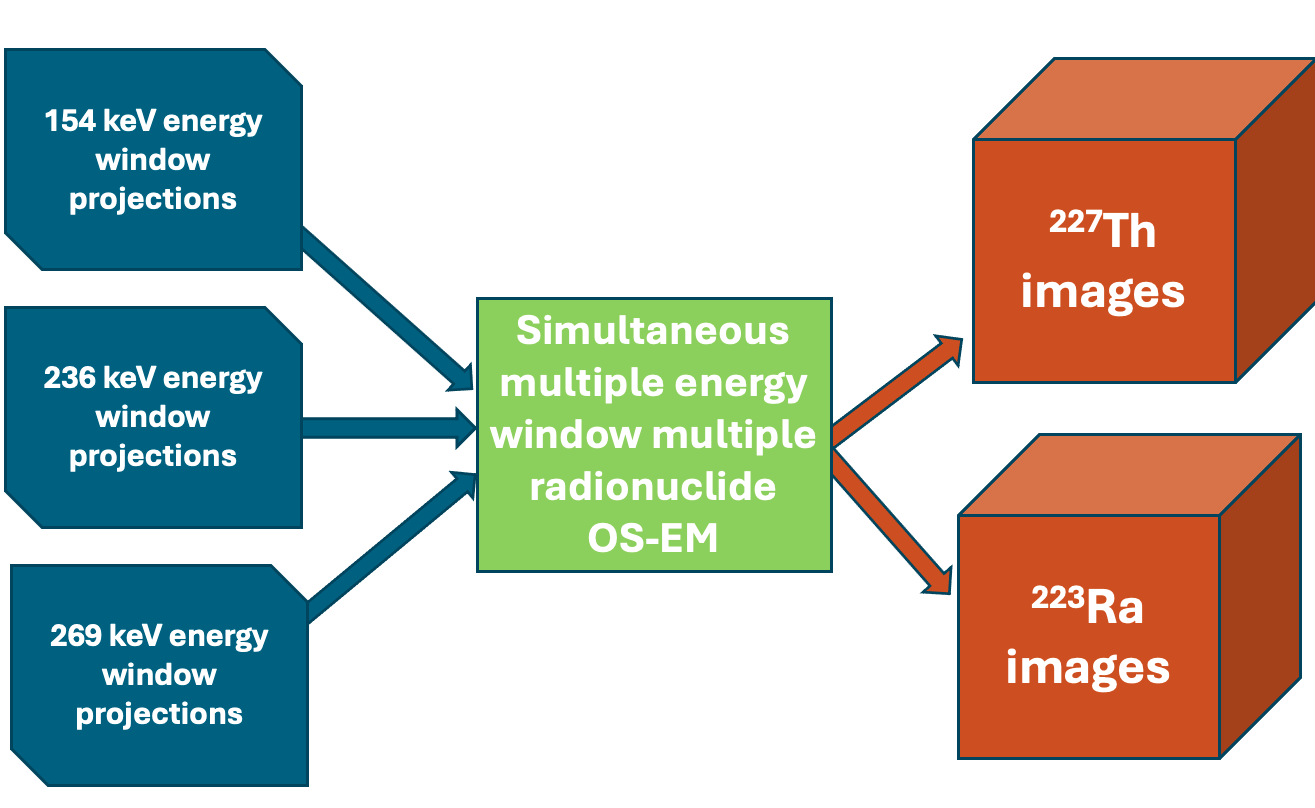

Quantitative multi-radionuclide SPECT reconstruction

We are developing quantitative multi-radionuclide reconstruction algorithms for simultaneously acquired SPECT data. We have created models to address scatter and crosstalk contamination, as well as collimator-detector response functions, which significantly enhance image quality and quantitative accuracy. Applications include 99mTc/201Tl cardiac rest/stress imaging, 99mTc/123I brain imaging, and SPECT imaging of alpha-emitters and their daughters for radiopharmaceutical therapy, among others.
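As a rough illustration of how crosstalk can be handled inside a statistical iterative reconstruction, the sketch below runs a joint MLEM update on a toy two-radionuclide problem. It is a minimal sketch under stated assumptions, not the lab's algorithm: the geometric response `G`, the energy-window response `K` (whose off-diagonal entries stand in for crosstalk), the voxel activities, and all function names are hypothetical, and scatter and collimator-detector response are not modeled.

```python
# Toy joint MLEM for two simultaneously imaged radionuclides (illustrative only).
# All numbers are made up; they are not measured system parameters.

# Geometric response: 2 detector bins x 2 voxels (hypothetical values).
G = [[0.8, 0.2],
     [0.3, 0.7]]

# Energy-window response K[w][r]: relative counts from radionuclide r that are
# recorded in window w. Off-diagonal entries model crosstalk (hypothetical).
K = [[1.0, 0.2],
     [0.1, 1.0]]


def build_system_matrix(K, G):
    """Stack both windows and both radionuclides into one matrix H = K (x) G."""
    n_bins, n_vox = len(G), len(G[0])
    H = []
    for w in range(len(K)):
        for i in range(n_bins):
            row = []
            for r in range(len(K[0])):
                row.extend(K[w][r] * G[i][j] for j in range(n_vox))
            H.append(row)
    return H


def forward(H, x):
    """Forward projection: expected counts in every bin of every window."""
    return [sum(h * v for h, v in zip(row, x)) for row in H]


def mlem(H, y, n_iter):
    """Standard MLEM update applied to the stacked (joint) problem."""
    n_meas, n_unknown = len(H), len(H[0])
    sens = [sum(H[i][j] for i in range(n_meas)) for j in range(n_unknown)]
    x = [1.0] * n_unknown  # uniform, strictly positive starting image
    for _ in range(n_iter):
        proj = forward(H, x)
        ratio = [y[i] / proj[i] for i in range(n_meas)]
        x = [x[j] / sens[j] * sum(H[i][j] * ratio[i] for i in range(n_meas))
             for j in range(n_unknown)]
    return x


H = build_system_matrix(K, G)
truth = [10.0, 5.0, 2.0, 8.0]  # [A voxel 0, A voxel 1, B voxel 0, B voxel 1]
y = forward(H, truth)          # noise-free counts in both energy windows
estimate = mlem(H, y, 2000)
print([round(v, 3) for v in estimate])
```

Because both radionuclides are estimated from both energy windows at once, the crosstalk counts contribute to the estimate rather than being subtracted as a separate correction step. With real, noisy data the iteration would typically be stopped early or regularized.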

Dr. Du is leading this project.

Grant support: R01EB031023, R01CA239041, U01CA140204, U01EB031798, and P01CA272222

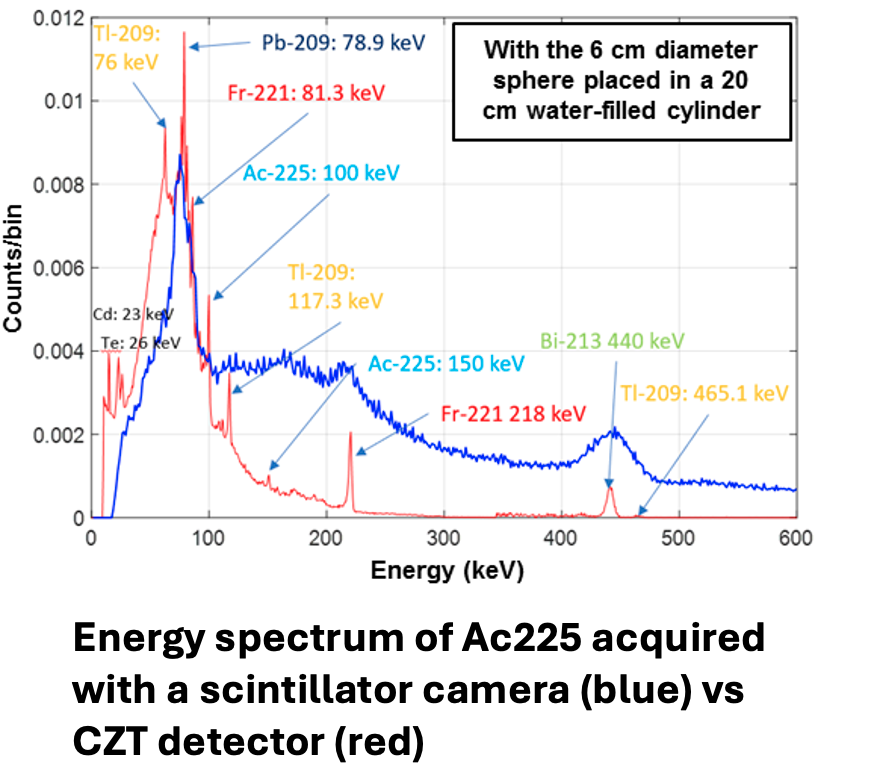

Alpha-SPECT

In this project, we are developing CZT detector-based SPECT systems that combine high energy resolution, high spatial resolution, and high sensitivity for imaging alpha emitters and their daughters used in alpha-emitter radiopharmaceutical therapy (RPT). This work is being conducted in collaboration with Dr. Ling-Jian Meng from the University of Illinois Urbana-Champaign (UIUC).

Dr. Du is leading this project.

Grant support: R01EB031023 and U01EB031798

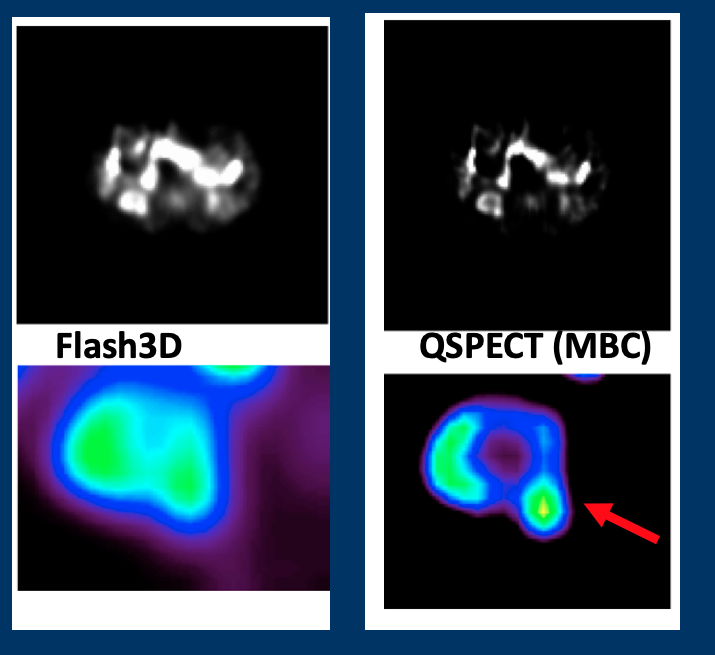

SPECT imaging of renal tumors

Renal mass biopsy, commonly used for pretreatment risk stratification of renal tumors, has an error rate of nearly 20%. 99mTc-sestamibi SPECT imaging has shown the ability to distinguish oncocytoma from other renal tumors. Our goal is to apply and optimize the quantitative SPECT (QSPECT) method specifically for renal 99mTc-sestamibi imaging, focusing on acquisition protocols, reconstruction methods, and quantitative analysis to enhance the reliability of renal SPECT. The overarching objective is to identify and validate reliable quantitative SPECT image biomarkers that can improve the characterization of renal tumors.

Dr. Du is leading this project.

Grant support: R01EB031023 and P01CA272222

PET/CT

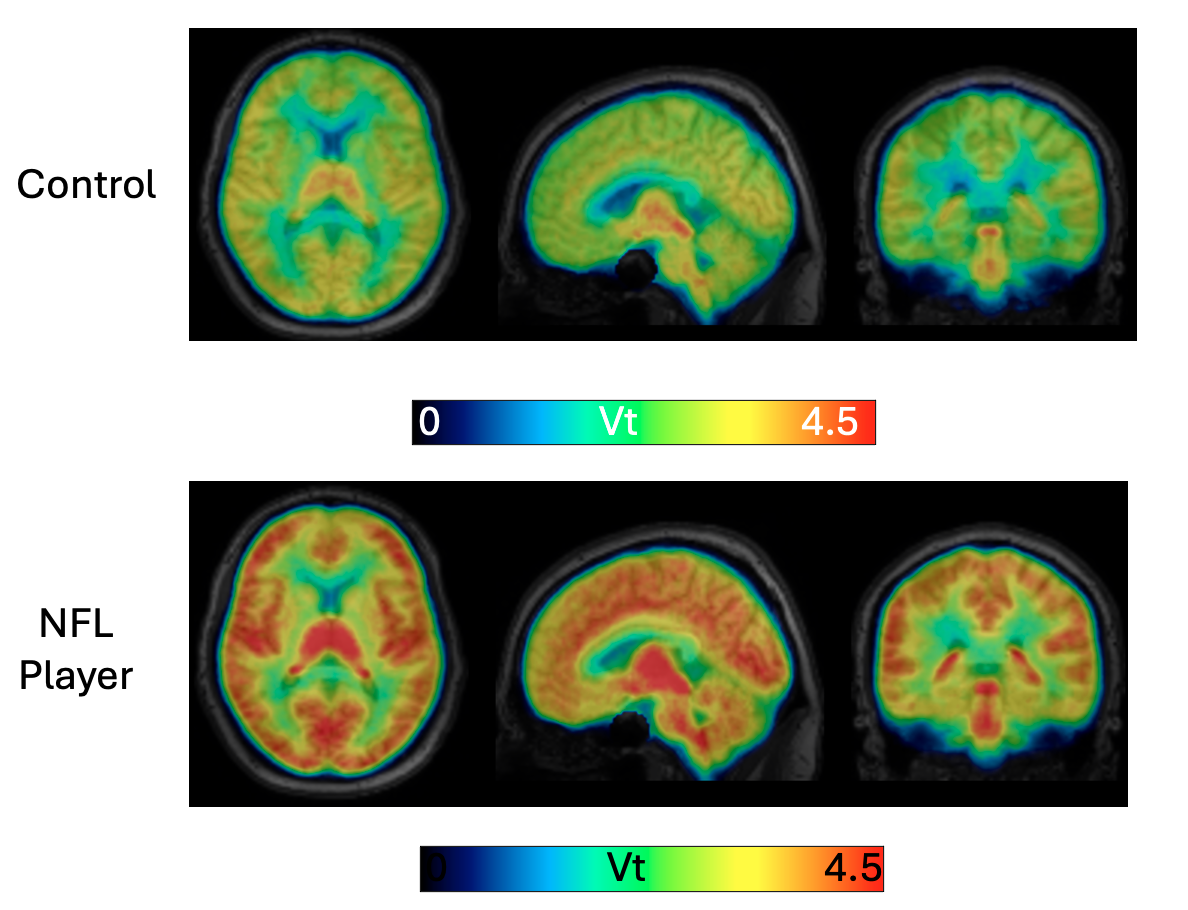

Dynamic PET and tracer kinetic modeling for neurological diseases

We are developing and validating optimized kinetic analysis methods for novel radiopharmaceuticals to image various brain pathologies. Examples of these radiopharmaceuticals include 18F-ASEM, 18F-XTRA, 11C-DPA713, and 11C-CPPC. Applications range from psychosis, HIV, Alzheimer’s disease, and Parkinson’s disease to chronic traumatic encephalopathy (CTE) in NFL players, among others.
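For readers unfamiliar with tracer kinetic modeling, the sketch below simulates the simplest standard model, a one-tissue compartment model, in which tissue concentration follows dC_T/dt = K1·C_p(t) − k2·C_T(t) and the total distribution volume is V_T = K1/k2. This is an illustrative assumption only: the text does not say which models are used for these tracers, and the rate constants, the constant plasma input, and the function name are hypothetical.

```python
def simulate_one_tissue(plasma, dt, K1, k2):
    """Euler integration of dC_T/dt = K1*C_p(t) - k2*C_T(t), with C_T(0) = 0.

    plasma : arterial input function sampled every `dt` minutes
    K1     : influx rate constant (mL/cm^3/min)
    k2     : efflux rate constant (1/min)
    """
    tissue = [0.0]
    for cp in plasma[:-1]:
        ct = tissue[-1]
        tissue.append(ct + dt * (K1 * cp - k2 * ct))
    return tissue


# Hypothetical values chosen only to make the behavior easy to check.
dt = 0.01                      # minutes
n_steps = 30000                # 300 minutes of simulated time
plasma = [1.0] * (n_steps + 1) # constant input (a real AIF peaks and decays)
K1, k2 = 0.1, 0.05

tissue = simulate_one_tissue(plasma, dt, K1, k2)
V_T = K1 / k2                  # total distribution volume
print(round(tissue[-1], 4), V_T)
```

With a constant input, the tissue curve approaches V_T times the plasma concentration, which is why V_T is a common outcome measure. Fitting K1 and k2 to a measured time-activity curve requires the arterial input function `plasma`, which is the quantity that arterial blood sampling normally provides.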

Dr. Du is leading this project.

Grant support: R01AG065202, R01MH119947, R21NS128391, R01AG066464, R01NS102006, and R21MH133015

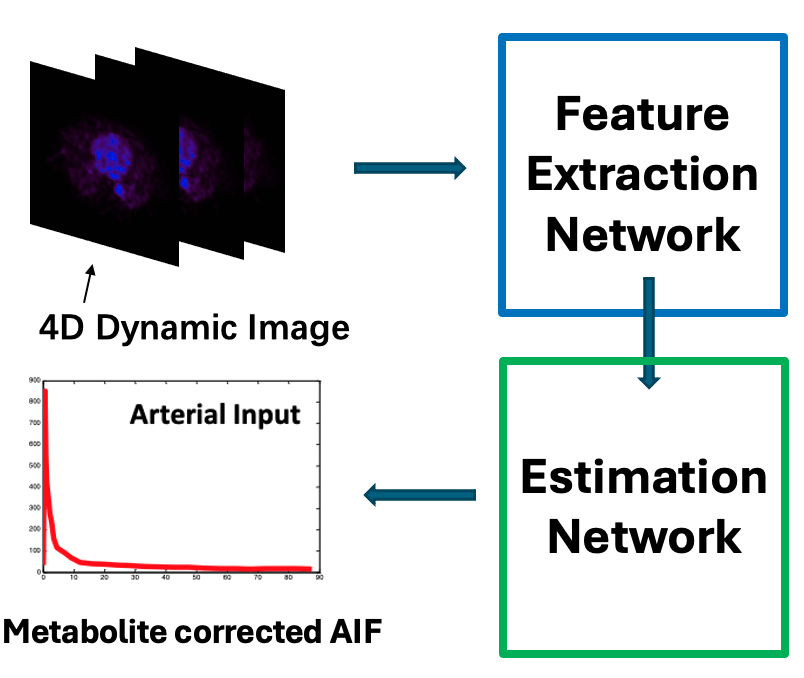

Deep learning arterial input function (DL-AIF)

To avoid the need for blood sampling during dynamic PET imaging, we have developed a deep learning-based method to extract the metabolite-corrected arterial input function (AIF) directly from the imaging data. The ability to accurately estimate the AIF without arterial sampling will have a significant impact on ongoing and emerging studies. This method will enhance patient comfort, reduce costs associated with anesthesiologist time, blood sampling, and processing (which require personnel and space), and improve recruitment and retention of research subjects in longitudinal studies.

This work is led by Drs. Chen and Du.

Radiopharmaceutical Therapy (RPT) Dosimetry

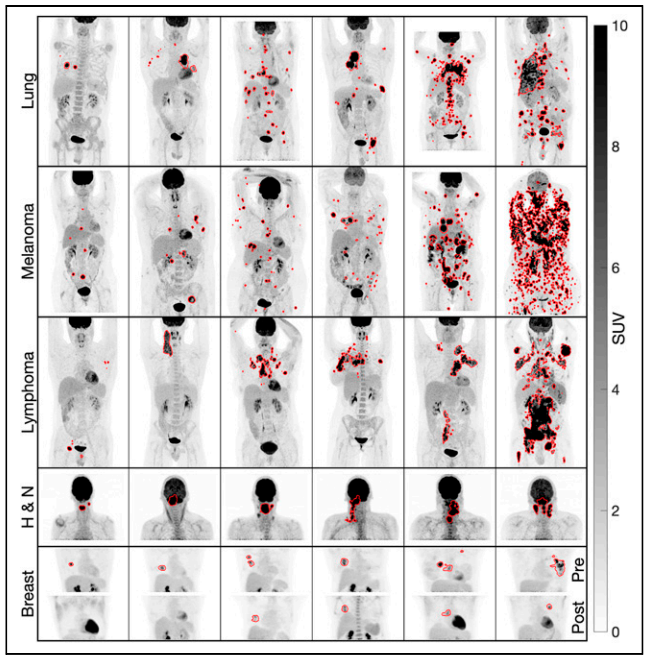

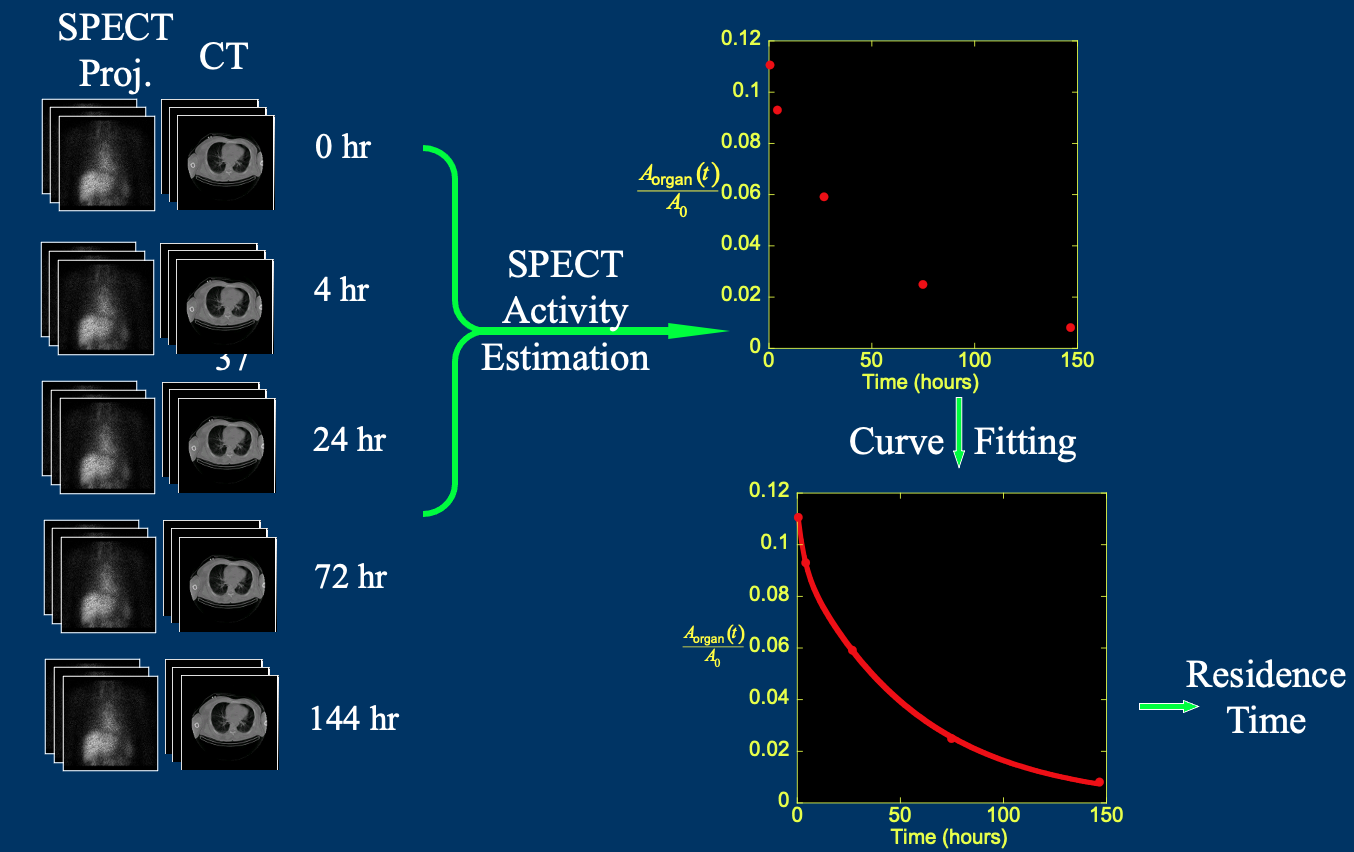

Image-based dosimetry for radiopharmaceutical therapy

We are working on image-based dosimetry for radiopharmaceutical therapy (RPT). This includes the development of quantitative reconstruction of SPECT images, deep learning (DL)-based image registration and segmentation, and statistical analysis of clinical trial data from various RPTs. The goal is to optimize treatment strategies for improved patient outcomes.

This work is led by Drs. Du and Chen.

Grant support: R01EB031023, U01EB031798, and P01CA272222

Digital Twin

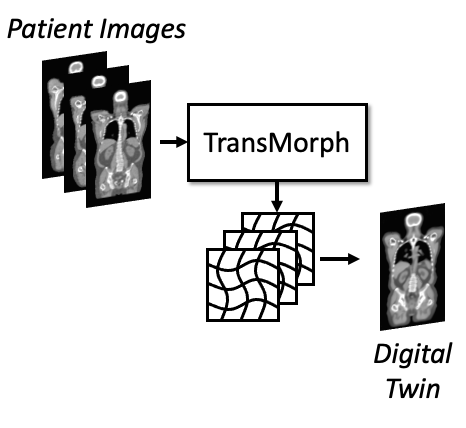

Digital twin for radiopharmaceutical therapy

We are developing digital twins of patients to simulate radiopharmaceutical therapy (RPT). The model uses a deformable registration network to transform a standard label map into patient-specific images, generating organ-level digital twins. Additionally, we incorporate models of sub-organ structures for dose-limiting organs such as the kidneys, as well as models of tumor growth and migration and of responses to therapy. The goal is to optimize RPT treatment regimens and monitor therapeutic efficacy for each individual patient.

This work is led by Dr. Chen.

Grant support: P01CA272222 and JHU Discover award.

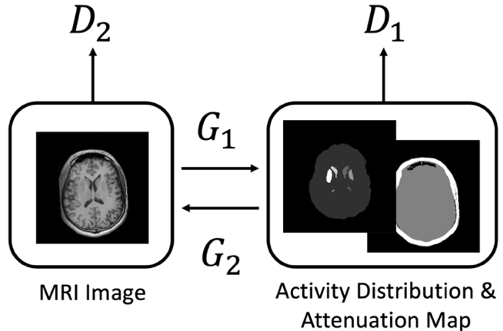

Brain Phantom

A generative adversarial network (GAN) was used to generate synthetic brain MRI images, which were then transformed with CycleGAN to model SPECT or PET tracer uptake and attenuation maps. This allows us to produce a population of brain phantoms that realistically models the variation and changes seen in the clinic. Simulated data from the phantom population can be used for validating imaging hardware designs, optimizing acquisition protocols, and evaluating reconstruction algorithms, as well as for generating training data for other deep learning methods.

This work is led by Dr. Leung.

Deep Learning

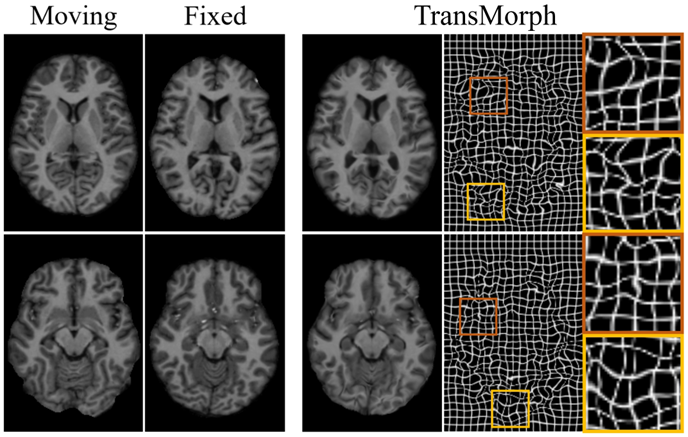

Deep learning-based medical image registration

Image registration plays a key role in enabling population-based analysis of anatomical structures, assessing therapy response, and calculating radiation dosimetry. We are leveraging deep learning to enhance image registration, aiming for fast and precise results across multiple modalities, including MRI, CT, PET, and SPECT.

The methods are available on GitHub. Please check the “Links & Software” tab for details.

Dr. Chen is leading this project.

Grant support: P01CA272222 and JHU Discover award.

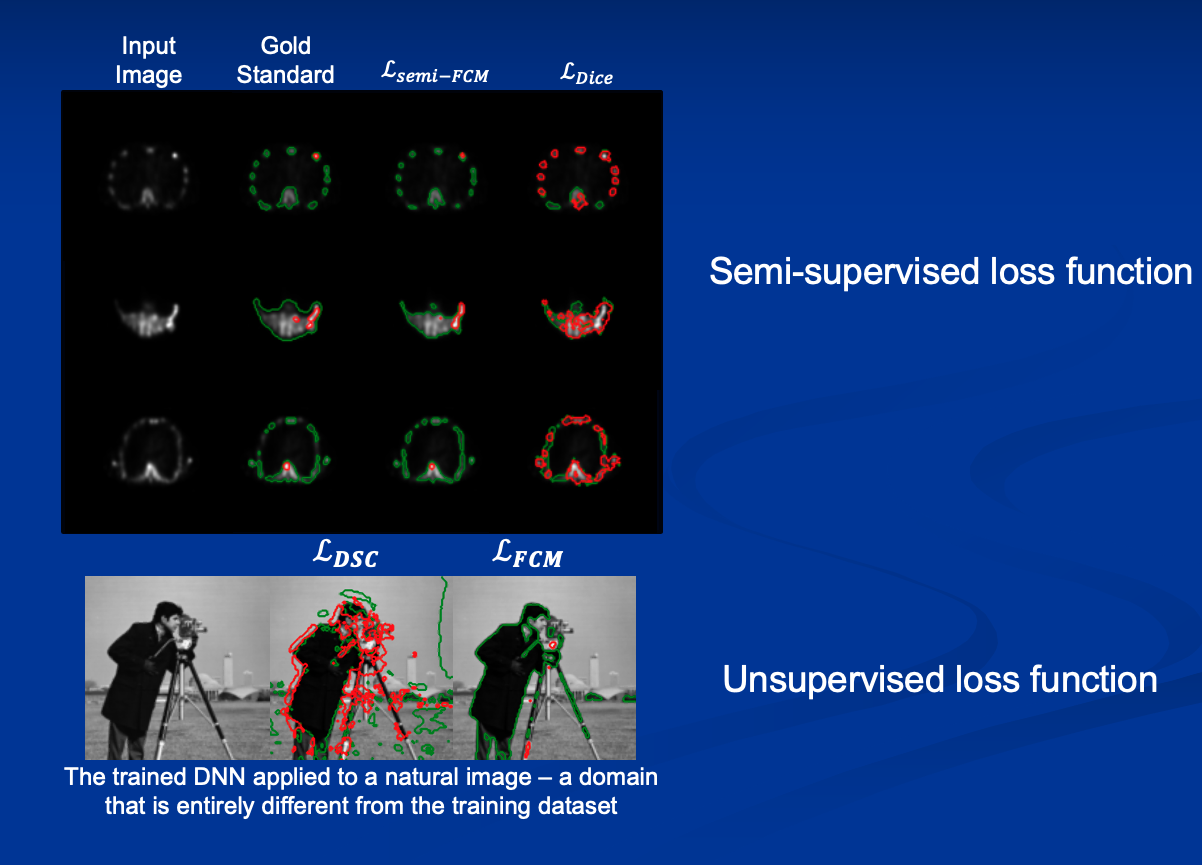

Semi-supervised learning for medical image segmentation

Deep learning has shown great success in medical image segmentation tasks, but it usually demands large datasets for training. For emerging imaging modalities, acquiring sufficient data can be difficult. We propose integrating classical unsupervised intensity clustering with deep learning to achieve accurate segmentation using significantly smaller datasets. This approach maintains robustness on unseen data while leveraging the speed of deep learning methods.

Many of these methods are available on GitHub. Please check the “Links & Software” tab for details.
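To give a sense of what classical unsupervised intensity clustering means in the segmentation approach described above, the sketch below clusters a handful of voxel intensities with one-dimensional k-means and returns a class label per voxel. It is a minimal sketch under stated assumptions: k-means is used here only as a familiar example of intensity clustering, the function name and intensity values are hypothetical, and how the clustering is coupled to the network is not shown.

```python
def kmeans_1d(values, k, n_iter=50):
    """Cluster scalar intensities into k classes (k >= 2) with plain k-means.

    Centers start evenly spaced between the minimum and maximum intensity,
    so the result is deterministic and labels are ordered by brightness.
    """
    lo, hi = min(values), max(values)
    centers = [lo + (hi - lo) * c / (k - 1) for c in range(k)]
    labels = [0] * len(values)
    for _ in range(n_iter):
        # Assignment step: each voxel takes the label of its nearest center.
        labels = [min(range(k), key=lambda c: abs(v - centers[c]))
                  for v in values]
        # Update step: each center moves to the mean of its members.
        new_centers = []
        for c in range(k):
            members = [v for v, lab in zip(values, labels) if lab == c]
            new_centers.append(sum(members) / len(members) if members
                               else centers[c])
        if new_centers == centers:
            break
        centers = new_centers
    return labels, centers


# Hypothetical normalized intensities for seven voxels.
intensities = [0.10, 0.12, 0.09, 0.50, 0.52, 0.90, 0.95]
labels, centers = kmeans_1d(intensities, k=3)
print(labels)  # [0, 0, 0, 1, 1, 2, 2]
```

Labels produced this way need no manual annotation, which is what makes intensity clustering attractive when annotated data are scarce.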

Dr. Chen is leading this project.

Grant support: P01CA272222 and JHU Discover award.